
Work summary

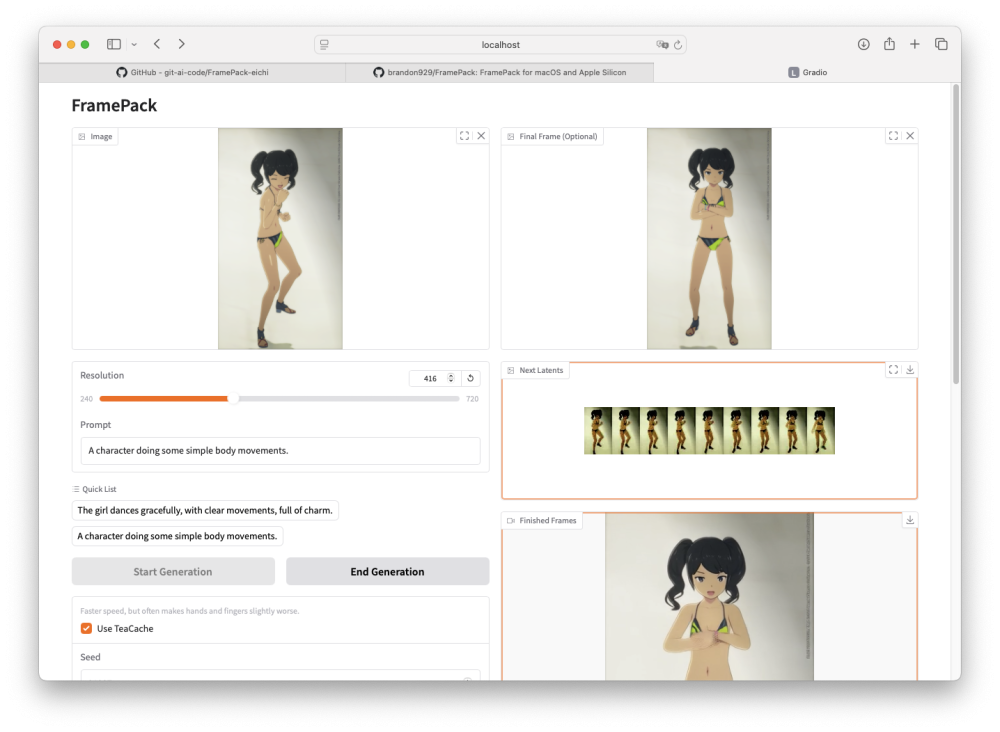

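To create videos with FramePack by interpolating frames between a start image and an end image, port the relevant processing from FramePack-eichi.

Continuation of [previous work].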

Procedure

1. Activate the virtual environment

Run the following commands in the terminal.

taiyos@mac-studio ~ % cd A.I./image

taiyos@mac-studio image % . venv/bin/activate

2. Modify demo_gradio.py

Run the following commands in the terminal.

(venv) taiyos@mac-studio image % cd FramePack

(venv) taiyos@mac-studio FramePack % cp -a demo_gradio.py demo_gradio.py.20250707

(venv) taiyos@mac-studio FramePack % vim demo_gradio.py

(venv) taiyos@mac-studio FramePack % diff demo_gradio.py.20250707 demo_gradio.py

106c106

< def worker(input_image, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier):

---

> def worker(input_image, end_frame, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier):

167a168,177

>         # Final Frame (Optional)

>         if end_frame is not None:

>             end_frame_np = resize_and_center_crop(end_frame, target_width=width, target_height=height)

>             Image.fromarray(end_frame_np).save(os.path.join(outputs_folder, f'{job_id}_end.png'))

>             end_frame_pt = torch.from_numpy(end_frame_np).float() / 127.5 - 1

>             end_frame_pt = end_frame_pt.permute(2, 0, 1)[None, :, None]

>             end_frame_latent = vae_encode(end_frame_pt, vae)

>         else:

>             end_frame_latent = None

>
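The preprocessing in this hunk can be tried in isolation. The following is a minimal sketch, not the FramePack code itself: it uses NumPy in place of torch so that it runs without the model, and `to_model_layout` is a hypothetical helper name. It shows how an H x W x 3 uint8 image is scaled to [-1, 1] and rearranged into a (batch, channel, frame, height, width) array holding a single frame, which is the layout the hunk builds before calling `vae_encode`.

```python
import numpy as np


def to_model_layout(image_hwc: np.ndarray) -> np.ndarray:
    """Hypothetical helper mirroring the end-frame preprocessing (NumPy stand-in)."""
    # Scale uint8 pixels from [0, 255] to [-1.0, 1.0].
    scaled = image_hwc.astype(np.float32) / 127.5 - 1.0
    # (H, W, C) -> (C, H, W), then add batch and frame axes -> (1, C, 1, H, W).
    return scaled.transpose(2, 0, 1)[None, :, None]


# Dummy 4x6 RGB image: top-left pixel black, everything else white.
dummy = np.full((4, 6, 3), 255, dtype=np.uint8)
dummy[0, 0] = 0

out = to_model_layout(dummy)
print(out.shape)  # (1, 3, 1, 4, 6)
```

The extra frame axis of length 1 is what lets a still image be handled like a one-frame video.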

234a245

>         is_first_section = True

237a249,251

>

>             print(f'latent_padding_size = {latent_padding_size}')

>             print(f'is_first_section = {is_first_section}, is_last_section = {is_last_section}')

238a253,257

>             if is_first_section:

>                 is_first_section = False

>                 if end_frame_latent is not None:

>                     history_latents[:, :, 0:1, :, :] = end_frame_latent

>

243,244d261

<             print(f'latent_padding_size = {latent_padding_size}, is_last_section = {is_last_section}')

<
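The loop changes above write the end-frame latent into the first frame slot of `history_latents` once, on the first iteration only. The sketch below traces just the flag logic, assuming a padding schedule of `[3, 2, 1, 0]` (a hypothetical value; the real list is computed elsewhere in `demo_gradio.py`). `plan_sections` is an illustrative name and does not exist in FramePack.

```python
def plan_sections(latent_paddings, has_end_frame):
    """Trace the per-section flags used in the modified sampling loop."""
    plan = []
    is_first_section = True
    for latent_padding in latent_paddings:
        is_last_section = latent_padding == 0
        # The end-frame latent is injected only on the first iteration,
        # and only when an end frame was actually supplied.
        inject_end_frame = is_first_section and has_end_frame
        is_first_section = False
        plan.append((latent_padding, inject_end_frame, is_last_section))
    return plan


# Hypothetical padding schedule for a four-section video.
for row in plan_sections([3, 2, 1, 0], has_end_frame=True):
    print(row)
```

Each printed tuple is (latent_padding, inject_end_frame, is_last_section). Only the first row has the injection flag set, and only the row with padding 0 is marked as the last section.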

356c373

< def process(input_image, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier):

---

> def process(input_image, end_frame, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier):

364c381

< async_run(worker, input_image, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier)

---

> async_run(worker, input_image, end_frame, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier)
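`end_frame` is passed positionally, so it has to sit in the same position (second, right after `input_image`) in `worker`, in `process`, in the `async_run` call, and in the inputs list given to the UI. A small check like the one below can catch an ordering slip; it is only a sketch with a shortened, hypothetical parameter list, and `order_matches` is not part of FramePack or Gradio.

```python
import inspect


def worker(input_image, end_frame, prompt, n_prompt, seed):
    """Stub with a shortened, hypothetical parameter list."""
    return None


def order_matches(func, names):
    """Return True when names are in the same order as func's parameters."""
    return list(inspect.signature(func).parameters) == list(names)


# Names in the order they would appear in the UI inputs list (shortened).
ips_names = ["input_image", "end_frame", "prompt", "n_prompt", "seed"]
print(order_matches(worker, ips_names))

# A misplaced end_frame is detected.
wrong = ["input_image", "prompt", "end_frame", "n_prompt", "seed"]
print(order_matches(worker, wrong))
```

The first call prints `True` and the second prints `False`.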

434a452

> end_frame = gr.Image(sources='upload', type="numpy", label="Final Frame (Optional)", height=320)

443c461

< ips = [input_image, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier]

---

> ips = [input_image, end_frame, prompt, n_prompt, seed, total_second_length, latent_window_size, steps, cfg, gs, rs, gpu_memory_preservation, use_teacache, mp4_crf, resolution, lora_file, lora_multiplier]

3. Launch FramePack

Run the following command in the terminal.

(venv) taiyos@mac-studio FramePack % python demo_gradio.py

Open the following URL in a web browser.

http://localhost:7860

4. Shut down

Press Ctrl+C to stop the program, then run the following command in the terminal.

(venv) taiyos@Mac FramePack % deactivate